
Building WOPR: A 7x4090 AI Server

Risers

In short, risers suck.

Like, a lot.

The reason we need risers for this build is that while the board has 7 PCIe slots, they're at single-slot spacing. That's no good for the 4090s, which occupy 3 slots on their own, never mind leaving room for airflow between them.

So we require some 'extension cables', aka risers.

One good thing came out of crypto: people got good at hooking lots of GPUs up to motherboards, so lucky for us there are a lot of risers on the market.

Unfortunately, they're not all created equal. If you want to run PCIe 3.0 x4, OK, you can get by with an eBay special. But if you want to run PCIe 4.0 without errors, you're going to need good risers with low signal attenuation.

Initial Tests and Errors

When I started this project I stopped in at Micro Center and bought a couple of 900mm Lian Li risers. In my mind this was the worst-case scenario, so testing with them would validate the idea before I went off and bought a load of GPUs (I already had two 4090s hanging off a Threadripper). I took them home, plugged the cards in, saw everything in nvidia-smi and nvtop, and thought I was good. Some weird stuff showed up on the screen, but I dismissed it since the cards appeared to be working (for inference, anyway). Eh, let's gooooo.

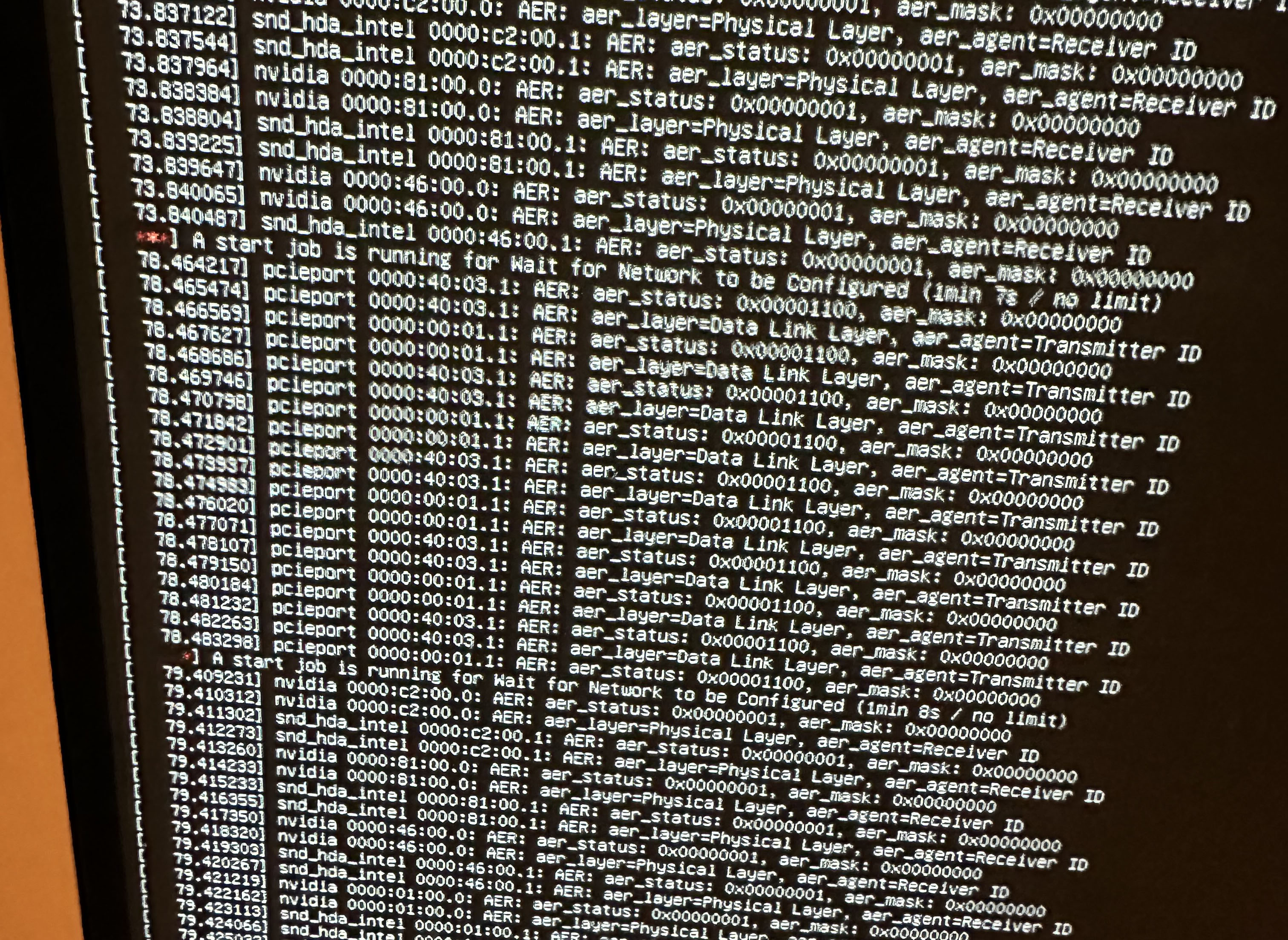

I got on Amazon and bought some risers of various lengths that claimed to be "PCIe 4.0". I picked up another five 4090s. I bought the mainboard, CPU, RAM, and so on. I assembled the rig, plugged in risers and cards, booted and WTF:

Those errors started spilling down the screen as soon as Linux brought up PCIe. Guess that weird stuff from before wasn't something to overlook after all.

Time to read about PCIe. Turns out the errors were from something called AER - Advanced Error Reporting. You can learn about it in the kernel docs: https://docs.kernel.org/PCI/pcieaer-howto.html

There was more detail in dmesg, showing the errors along with decoded error masks. Lots of things like "MalformedTLP", et cetera. Basically, transaction layer packets (TLPs) weren't getting where they should, how they should, when they should, which is exactly what you'd predict from physical cabling that's not up to spec. All the errors I saw were "correctable", which means the data link layer noticed an issue and replayed the packet until it went through, much like retransmission on a network.
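The "error status/mask" pair that AER prints is a bitmask of the PCIe Correctable Error Status register, and decoding it by hand makes the dmesg output less mysterious. A minimal sketch, assuming the bit positions and short names match those used by the Linux AER driver (worth verifying against your kernel); the hex values in the example are made up for illustration, not taken from a real log.

```python
# Bit positions in the PCIe Correctable Error Status register, labeled with the
# short names the Linux AER driver prints (assumed from drivers/pci/pcie/aer.c).
CORRECTABLE_BITS = {
    0: "RxErr",         # Receiver Error, detected at the physical layer
    6: "BadTLP",        # TLP failed its LCRC check; the link layer replays it
    7: "BadDLLP",       # Data Link Layer Packet failed its CRC check
    8: "Rollover",      # REPLAY_NUM rolled over: too many replays of one TLP
    12: "Timeout",      # Replay timer expired before an ACK arrived
    13: "NonFatalErr",  # Advisory non-fatal error
    14: "CorrIntErr",   # Corrected internal error
    15: "HeaderOF",     # Header log overflow
}


def decode_correctable(status: int, mask: int = 0) -> list[str]:
    """Names of the error bits set in `status` that are not masked off by `mask`."""
    active = status & ~mask
    return [name for bit, name in sorted(CORRECTABLE_BITS.items()) if (active >> bit) & 1]


# Illustrative values only:
print(decode_correctable(0x00000041))                   # -> ['RxErr', 'BadTLP']
print(decode_correctable(0x00002040, mask=0x0000A000))  # -> ['BadTLP'] (bit 13 is masked)
```

Receiver errors and bad TLPs/DLLPs are the flavor you'd expect from a marginal physical link, since they mean bits got corrupted on the wire.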

It's great that the errors were corrected, but when you get that many, it's not good. Imagine going to the grocery store, coming home, and finding half the groceries gone. So you go back, over and over, until you finally get home with all of them. With PCIe the trip is a LOT shorter (PCIe 4.0 runs at 16 gigatransfers per second per lane), but if a significant portion of trips have errors, it can be noticeable. The data still arrives in one piece, but performance suffers.

That evening was a little rough. I had seven 4090s along with a lot of other not-cheap gear, and it wasn't working.

Moar Risers

The obvious suspects were the risers, so I turned all the slots down to PCIe 3.0, thinking the slower signaling would be more robust. And it was. No errors.
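After changing slot speeds it's worth confirming what each card actually negotiated. Linux exposes this in sysfs as `current_link_speed`, `current_link_width`, `max_link_speed` and `max_link_width` under each PCI device. A small sketch, assuming a reasonably recent kernel; the helper names are hypothetical, and the "VGA class" filter assumes the 4090s report as display controllers (class `0x0300xx`).

```python
from pathlib import Path
from typing import Optional

PCI_ROOT = Path("/sys/bus/pci/devices")


def parse_speed_gts(text: str) -> Optional[float]:
    """'16.0 GT/s PCIe' (newer kernels) or '8 GT/s' (older) -> 16.0 or 8.0.
    Returns None for values such as 'Unknown' when the link is down."""
    try:
        return float(text.split()[0])
    except (IndexError, ValueError):
        return None


def link_status(dev: Path) -> dict:
    """Negotiated vs. maximum link speed and width for one PCI device."""
    def read(name: str) -> str:
        return (dev / name).read_text().strip()

    return {
        "speed_gts": parse_speed_gts(read("current_link_speed")),
        "max_speed_gts": parse_speed_gts(read("max_link_speed")),
        "width": int(read("current_link_width")),
        "max_width": int(read("max_link_width")),
    }


def nvidia_gpus(root: Path = PCI_ROOT) -> list[Path]:
    """Devices with NVIDIA's vendor ID (0x10de) and a VGA display class (0x0300xx)."""
    if not root.exists():
        return []
    return [
        dev
        for dev in sorted(root.iterdir())
        if (dev / "vendor").read_text().strip() == "0x10de"
        and (dev / "class").read_text().startswith("0x0300")
    ]
```

Usage would be something like `for dev in nvidia_gpus(): print(dev.name, link_status(dev))`, expecting `8.0` at Gen 3 and `16.0` at Gen 4. GPUs commonly drop to a lower link speed when idle to save power, so check while the cards are busy.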

OK. Well, if I had to run it at PCIe 3.0, it'd still be great for inference, at least. Training would work with some performance hit, but I really wanted to run this thing at PCIe 4.0. So I started ordering risers. Different brands, different lengths.
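To put a number on what dropping to PCIe 3.0 costs, the usable link bandwidth follows from the per-lane transfer rate, the lane count, and the line coding. A rough sketch: it accounts for 128b/130b encoding but not packet headers or flow control, so real-world throughput is somewhat lower.

```python
# Per-lane transfer rate (GT/s) for each generation. PCIe 3.0 through 5.0 all use
# 128b/130b line coding, so 128 of every 130 bits on the wire carry data.
RATE_GTS = {3: 8.0, 4: 16.0, 5: 32.0}
ENCODING_EFFICIENCY = 128 / 130


def link_bandwidth_gbs(gen: int, lanes: int) -> float:
    """One-direction bandwidth in GB/s after line coding. Packet headers and
    flow control eat a bit more in practice, so real throughput is lower."""
    bits_per_second = RATE_GTS[gen] * 1e9 * lanes * ENCODING_EFFICIENCY
    return bits_per_second / 8 / 1e9


for gen in (3, 4):
    for lanes in (4, 16):
        print(f"PCIe {gen}.0 x{lanes}: {link_bandwidth_gbs(gen, lanes):5.2f} GB/s per direction")
```

At x16 that's roughly 15.75 GB/s each way at Gen 3 versus roughly 31.5 GB/s at Gen 4, so halving the speed halves the bandwidth available for gradient and parameter traffic. By the same arithmetic, Gen 5 x16 is 32 GT/s times 16 lanes, or 64 GB/s per direction before encoding and 128 GB/s counting both directions, which is likely where "128GB/s" figures on Gen 5 riser listings come from.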

I spent a lot of money on risers, so you don't have to.

A lot didn't work, but one set stood above the rest.

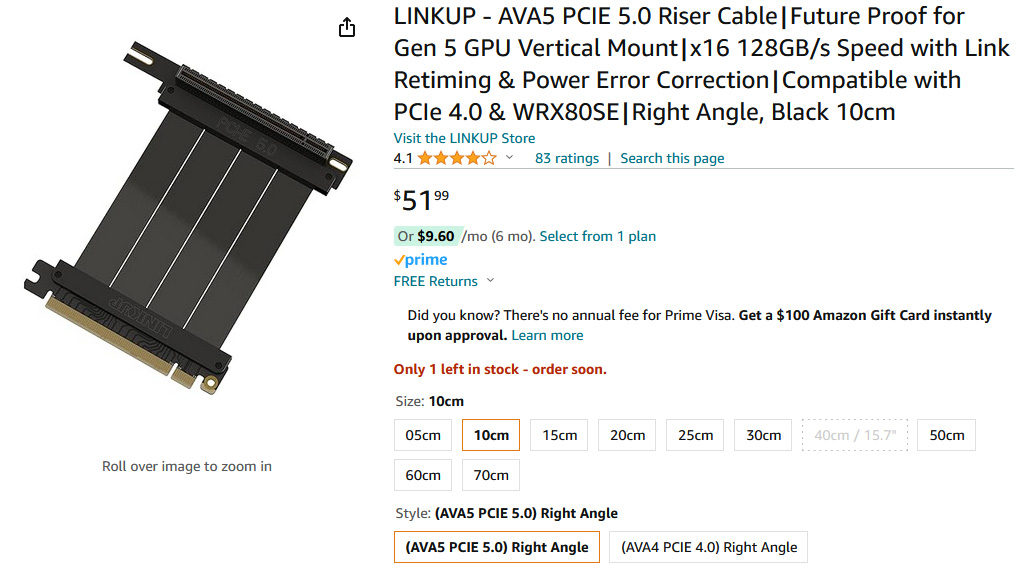

Behold, the LINKUP - AVA5 PCIE 5.0 Riser Cable. Links at the end of this writeup, but continue reading because there's more.

If you look this thing up online, it advertises "Future Proof for Gen 5 GPU" and "128GB/s Speed with Link Retiming and Power Error Correction". I'll get to what retiming is in a bit, but that really caught my eye, in a suspicious way. When they showed up, I took the little shields off the ends of the cable (they look like strain relief but they're not) looking for chips. Spoiler alert: these are absolutely NOT retimer risers.

But you know what? They work. With one caveat.

The cursed Slot 2.

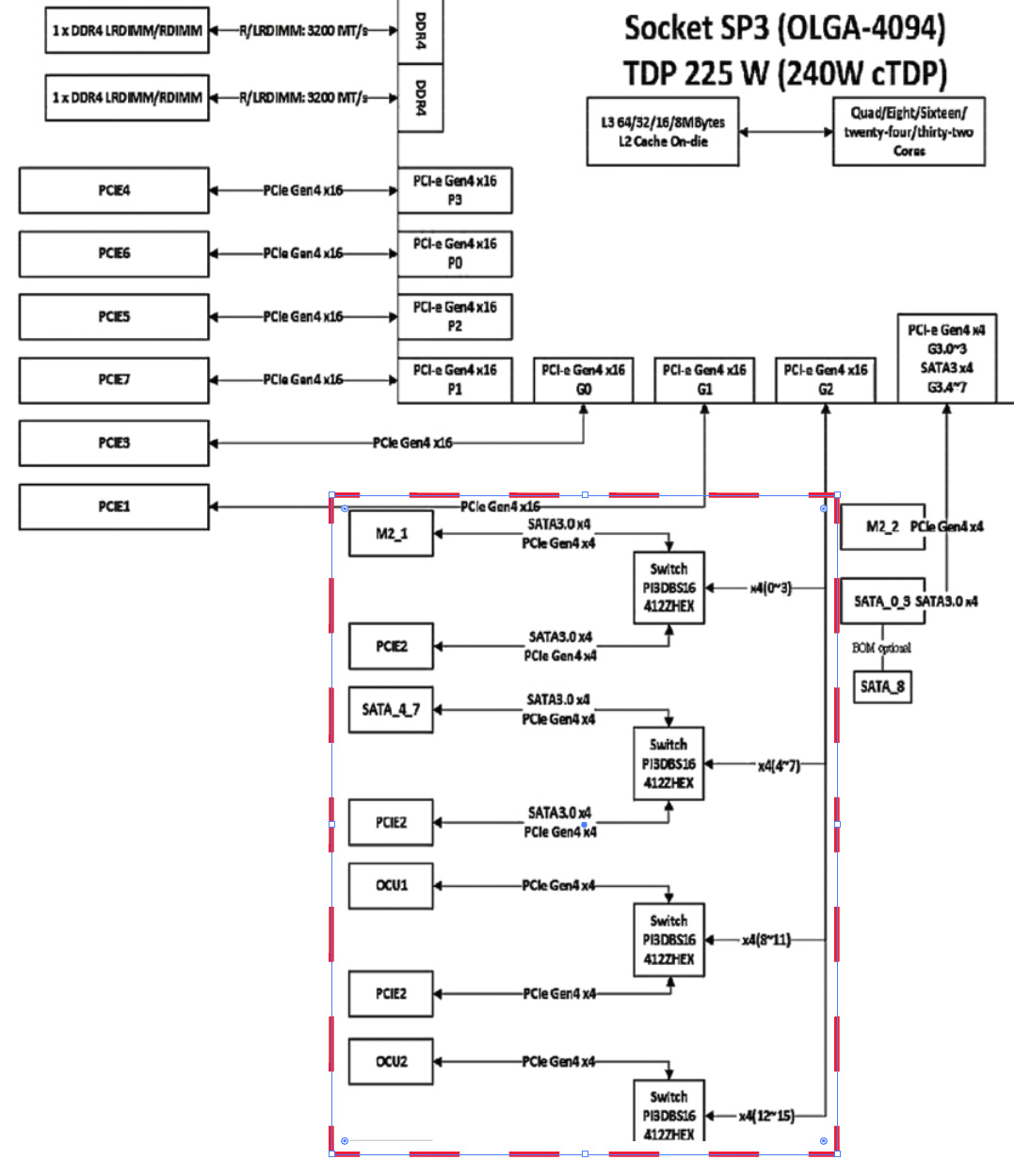

While testing risers I couldn't figure out why this stupid slot was misbehaving. It had one of the shortest risers but the most errors. I tested many risers, still no good. But then I got suspicious and went looking at the motherboard's block diagram. Do you see the issue? I outlined it in red for you.

As mentioned, the AMD Epyc has 128 lanes of PCIe 4.0. 7 slots at 16 lanes per slot is 112 lanes. That leaves 16 lanes for everything else: 8 lanes for the two SATA controllers, 8 lanes for the OcuLink controllers, and 8 lanes for the two M.2 drive slots. Wait, that's 24 lanes. Uh oh.
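The lane budget in the paragraph above, spelled out as a quick tally. The device groupings and lane counts are the ones described in the text; nothing else about the board is assumed.

```python
CPU_LANES = 128  # PCIe 4.0 lanes on the Epyc

# Lane demands as described for this board's block diagram.
slots = {f"Slot {i}": 16 for i in range(1, 8)}
onboard = {
    "SATA controllers (2)": 8,
    "OcuLink controllers": 8,
    "M.2 slots (2)": 8,
}

slot_lanes = sum(slots.values())
remaining = CPU_LANES - slot_lanes
onboard_lanes = sum(onboard.values())
shortfall = onboard_lanes - remaining

print(f"slots use {slot_lanes} lanes, leaving {remaining}")          # -> 112, leaving 16
print(f"onboard devices want {onboard_lanes}: short by {shortfall}")  # -> 24: short by 8
```

Eight lanes have to come from somewhere, so some devices must share.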

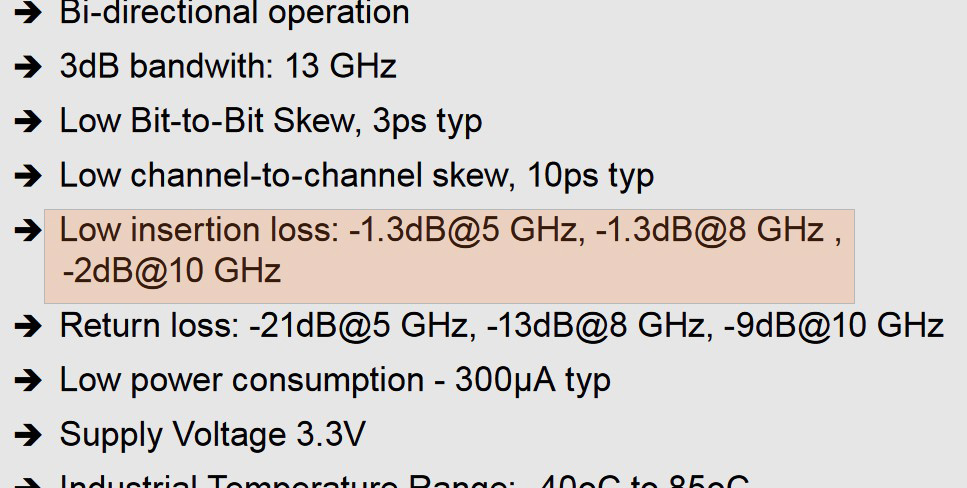

So clearly some of these devices are sharing lanes. Kind of. What the board designers did was add a series of onboard switches (the PI3DBS16 from Diodes, Inc.) that let the user route lanes either to PCIe Slot 2 or to the second M.2 and SATA devices along with the two OcuLink controllers. Configuration is done with an onboard jumper, easy. But this comes at a cost. Check the datasheet for the PI3DBS16:

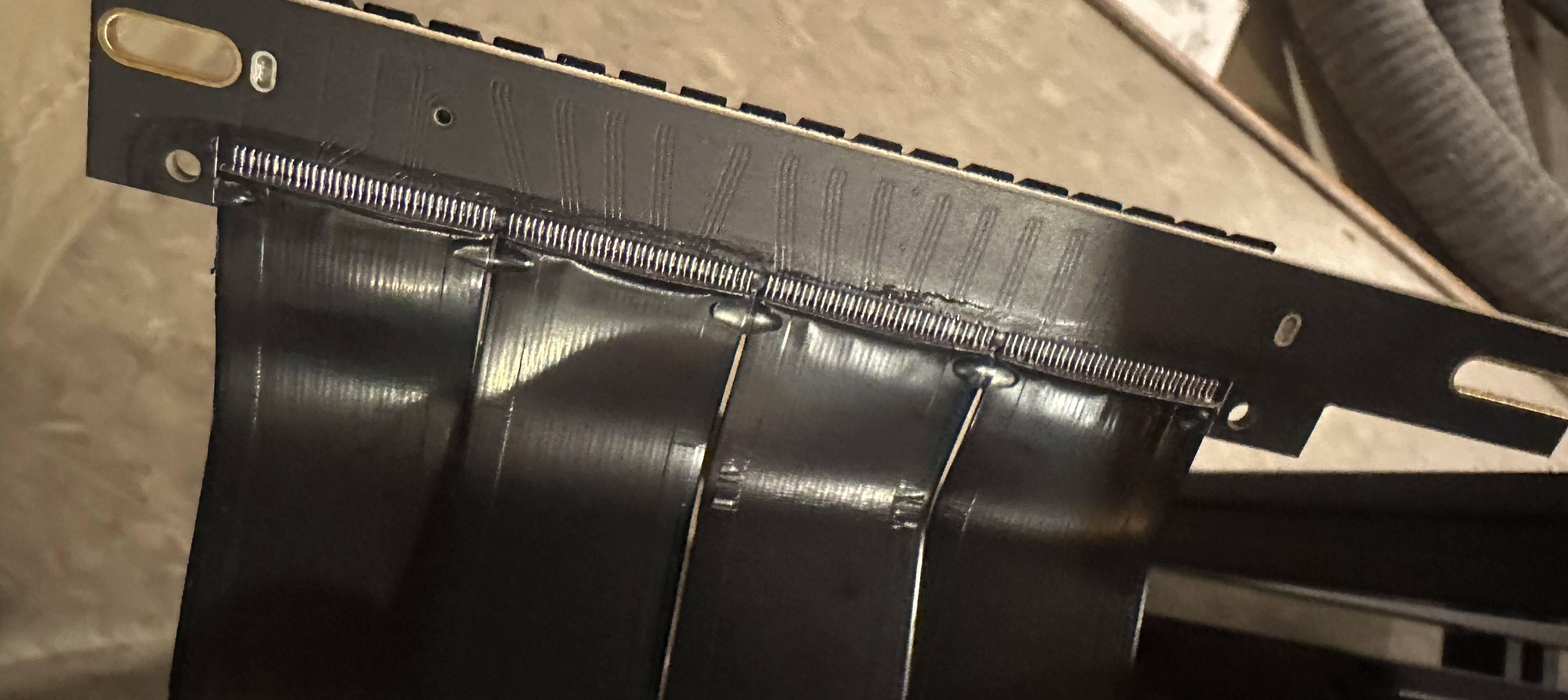

See that? LOSS. PCIe is a very high-speed bus, and it can only tolerate so much signal loss before it stops working well. And we're stacking the riser loss AND the switch loss. That makes Slot 2 really unreliable with ordinary risers.
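Why stacking hurts: insertion losses in dB add along the channel, and the sum has to stay inside the link's loss budget at the signaling's Nyquist frequency. The sketch below illustrates the bookkeeping only. Every per-segment number in it is a HYPOTHETICAL placeholder, not a measurement or a datasheet value, and the 28 dB Gen 4 budget is an assumed, commonly quoted ballpark that should be checked against the PCIe specification.

```python
def nyquist_ghz(rate_gts: float) -> float:
    """PCIe 3.0 through 5.0 use NRZ signaling (one bit per symbol), so the
    fundamental (Nyquist) frequency is half the transfer rate."""
    return rate_gts / 2


def amplitude_fraction(loss_db: float) -> float:
    """Fraction of the signal's voltage amplitude left after `loss_db` of insertion loss."""
    return 10 ** (-loss_db / 20)


def channel_margin_db(losses_db: dict[str, float], budget_db: float) -> float:
    """Budget minus total loss. Segment losses in dB simply add, because the
    linear attenuations of cascaded segments multiply."""
    return budget_db - sum(losses_db.values())


# HYPOTHETICAL per-segment losses at the Gen4 Nyquist frequency (8 GHz), chosen
# only to illustrate stacking; real numbers depend on layout, riser, and datasheets.
slot2 = {"board traces": 10.0, "lane switch": 3.0, "riser": 9.0, "GPU card": 8.0}
without_switch = {k: v for k, v in slot2.items() if k != "lane switch"}
BUDGET_DB = 28.0  # ASSUMED end-to-end Gen4 channel budget; check the PCIe spec

print(f"Gen3 Nyquist: {nyquist_ghz(8.0)} GHz, Gen4 Nyquist: {nyquist_ghz(16.0)} GHz")
print(f"margin with switch:    {channel_margin_db(slot2, BUDGET_DB):+.1f} dB")
print(f"margin without switch: {channel_margin_db(without_switch, BUDGET_DB):+.1f} dB")
total = sum(slot2.values())
print(f"amplitude left after {total:.0f} dB: {amplitude_fraction(total):.1%}")
```

Insertion loss also grows with frequency, so the same riser loses less at Gen 3's 4 GHz than at Gen 4's 8 GHz, which fits the observation that dropping to PCIe 3.0 made the errors go away.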

But there's a solution. It's not cheap, but it works well. Really well. Enter the "C-Payne PCIe gen5 MCIO Host Adapter x16 to 2* 8i - retimer". This thing uses a PCIe retimer chip, which is, for all intents and purposes, a repeater. It's an active participant on the PCIe link and does some of the same jobs as the CPU's PCIe controller: it negotiates the link and handles other housekeeping. The card talks to the retimer chip, and the retimer chip talks to the CPU's PCIe controller.

This is in contrast to a redriver, which is basically a dumb amplifier. It's possible a redriver could work in this particular situation, but retimers are a lot more bulletproof because they essentially send a whole new signal down the riser to the card. Downside? It'll run you $500 for a single slot. But it works.
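A toy model of why regenerating the signal beats amplifying it. It treats each half of the path (CPU to repeater, repeater to GPU) as adding its own noise, described by a per-segment signal-to-noise ratio. A pure amplifier passes earlier noise along, so inverse SNRs add; a device that recovers the bits starts the next segment clean. This is a deliberate simplification that ignores the equalization real redrivers do and the jitter reset and link training real retimers do, and the 20 dB figures are hypothetical.

```python
import math


def to_db(ratio: float) -> float:
    return 10 * math.log10(ratio)


def from_db(value_db: float) -> float:
    return 10 ** (value_db / 10)


def redriven_snr_db(segment_snrs_db: list[float]) -> float:
    """Toy linear redriver: it boosts the signal and the noise collected so far
    alike, so every segment's noise reaches the receiver. Inverse SNRs add."""
    return to_db(1 / sum(1 / from_db(s) for s in segment_snrs_db))


def retimed_snr_db(segment_snrs_db: list[float]) -> float:
    """Toy retimer: it recovers the bits and transmits a fresh signal, so noise
    does not carry across it and the weakest segment dominates (an approximation
    that ignores the small chance of bit errors in each segment adding up)."""
    return min(segment_snrs_db)


# HYPOTHETICAL: two equally noisy halves, CPU -> repeater and repeater -> GPU.
halves = [20.0, 20.0]
print(f"redriver: {redriven_snr_db(halves):.1f} dB")  # -> 17.0 dB
print(f"retimer:  {retimed_snr_db(halves):.1f} dB")   # -> 20.0 dB
```

In this model two equal halves lose about 3 dB through an amplifier but nothing through a regenerator, which is the intuition behind "a whole new signal down the riser".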

Results

So the upshot of all this is, with the C-Payne retimer riser in Slot 2 and the LINKUP risers everywhere else, the system runs well.

I'm training models.

Disclaimer, again:

While zero errors was my goal, my system still isn't 100% error-free.

For inference, no errors. For plain DDP training, I haven't noticed any errors either. BUT, when running something like DeepSpeed ZeRO-3, I do get the occasional correctable error. That's because a lot more data gets pushed over the bus with something like ZeRO-3.
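A back-of-the-envelope comparison of per-GPU traffic, using the idealized accounting from the ZeRO paper. DDP all-reduces the gradients once (2 times the parameter count); ZeRO-3 reduce-scatters the gradients and also all-gathers the parameters in both the forward and backward passes (3 times the parameter count). It assumes ring collectives, bf16 (2 bytes per element), and a round 7e9 parameters, and it ignores activations, CPU offload, and overlap.

```python
def per_gpu_bytes_per_step(n_params: float, n_gpus: int,
                           bytes_per_elem: int = 2, zero3: bool = False) -> float:
    """Idealized bytes each GPU sends per step with ring collectives.
    DDP: one gradient all-reduce = reduce-scatter + all-gather = 2 passes.
    ZeRO-3: gradient reduce-scatter plus parameter all-gathers in the
    forward and backward passes = 3 passes.
    Each ring pass moves (N - 1) / N of the data per GPU."""
    passes = 3 if zero3 else 2
    ring_factor = (n_gpus - 1) / n_gpus
    return passes * ring_factor * n_params * bytes_per_elem


N_PARAMS = 7e9  # ASSUMED round size for a 7B model
N_GPUS = 7
ddp = per_gpu_bytes_per_step(N_PARAMS, N_GPUS)
z3 = per_gpu_bytes_per_step(N_PARAMS, N_GPUS, zero3=True)
print(f"DDP:    {ddp / 1e9:.0f} GB per GPU per step")
print(f"ZeRO-3: {z3 / 1e9:.0f} GB per GPU per step ({z3 / ddp:.1f}x)")
```

Even in this idealized form ZeRO-3 moves about 1.5x the data, and since ZeRO-3 gathers parameters on every forward and backward pass while DDP can all-reduce once per optimizer step, the gap grows with gradient accumulation. The 4090s have no NVLink, so all of it crosses PCIe.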

I haven't narrowed down which slot it is yet, but I don't think it's Slot 2. In a recent test, a full fine-tune of Mistral 7B using ZeRO-3, I got maybe a dozen(?) errors over the course of a 20- or 30-minute run. But I didn't notice any actual hiccups watching the training itself, and the resulting model worked great. I'm planning to leave it for now, but if I get around to it, maybe I'll throw another C-Payne card into the offending slot.
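One way to find the offending slot is to snapshot the per-device AER counters before and after a training run and see which ones moved. A sketch, assuming a kernel that exposes `aer_dev_correctable` in sysfs with AER enabled; the function names are hypothetical helpers, not an existing tool.

```python
from pathlib import Path

PCI_ROOT = Path("/sys/bus/pci/devices")


def parse_counters(text: str) -> dict[str, int]:
    """Parse an aer_dev_correctable file: one '<name> <count>' pair per line.
    Some kernels use multi-word names, so split on the last whitespace only."""
    counters = {}
    for line in text.splitlines():
        if line.strip():
            name, count = line.rsplit(maxsplit=1)
            counters[name] = int(count)
    return counters


def snapshot(root: Path = PCI_ROOT) -> dict[str, dict[str, int]]:
    """Correctable-error counters for every PCI device that exposes them."""
    if not root.exists():
        return {}
    snap = {}
    for dev in sorted(root.iterdir()):
        stats = dev / "aer_dev_correctable"
        if stats.exists():
            snap[dev.name] = parse_counters(stats.read_text())
    return snap


def diff(before: dict, after: dict) -> dict[str, dict[str, int]]:
    """Counters that increased between two snapshots, keyed by PCI address."""
    changes = {}
    for bdf, counts in after.items():
        prev = before.get(bdf, {})
        delta = {name: n - prev.get(name, 0) for name, n in counts.items()}
        delta = {name: d for name, d in delta.items() if d > 0}
        if delta:
            changes[bdf] = delta
    return changes
```

Take `before = snapshot()` ahead of the run and print `diff(before, snapshot())` afterward. An error can be counted on either end of a link (the root port or the GPU), so map the reported addresses back to physical slots with `lspci -tv`.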

I also want to say that there are a lot of variables here. Board manufacturing has tolerances, et cetera. So: Your Mileage May Vary. You're not buying an enterprise-grade rack full of servers that have been through a testing lab. This is cowboy stuff. If you have issues I'm glad to try and help, just drop me a DM on X. But don't hold me responsible if you follow my path and aren't satisfied. You've been warned.


| Nathan Odle - <nathan.odle@gmail.com> - @mov_axbx on X |