
Building WOPR: A 7x4090 AI Server

Disclaimer

This system is about as DIY as it gets. If you're reading this, I imagine the idea of this much GPU horsepower for the money sounds pretty good. But if this system is going to be mission-critical, you should at least consider other options. I would recommend looking at tinybox, George Hotz's product. Tinybox is a commercial product, presumably with some level of support. It's slated to run either 6x AMD 7900 XTX GPUs or 6x Nvidia 4090s and is currently priced at $15k for "Red" (AMD) or $25k for "Green" (Nvidia).

If you're still reading: while zero PCIe errors was my goal, my system is still not 100% error-free (though I'm pretty sure it can be).

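For inference, no errors. For plain DDP training, I haven't noticed any errors either. But while running something like DeepSpeed ZeRO-3, I do get the occasional correctable PCIe error. That's because ZeRO-3 pushes a lot more data over the bus: it shards parameters, gradients, and optimizer states across the GPUs, so each layer's parameters have to be gathered from the other cards for the forward and backward passes, instead of the single gradient all-reduce per step that DDP does.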
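
To put rough numbers on that, here's a minimal Python sketch of the idealized per-GPU communication volume for one step, following the accounting in the ZeRO paper: DDP's gradient all-reduce moves about 2x the model size, while ZeRO-3 all-gathers parameters for the forward pass, again for the backward pass, and then reduce-scatters gradients, about 3x the model size. The 7B round number, bf16 (2 bytes per element), ring collectives, and the absence of gradient accumulation, prefetching, or offload are all assumptions; real DeepSpeed traffic will differ.

```python
# Idealized per-GPU communication volume for one training step, following the
# ZeRO paper's accounting. Assumptions (not measured on this machine): bf16
# params/grads (2 bytes each), ring collectives, no prefetch/offload overhead.


def ring_fraction(n_gpus: int) -> float:
    """Fraction of a tensor each GPU sends in a ring all-gather or reduce-scatter."""
    return (n_gpus - 1) / n_gpus


def ddp_bytes_per_gpu(n_params: float, n_gpus: int, bytes_per_elem: int = 2) -> float:
    """DDP: one gradient all-reduce = reduce-scatter + all-gather."""
    model_bytes = n_params * bytes_per_elem
    return 2 * ring_fraction(n_gpus) * model_bytes


def zero3_bytes_per_gpu(n_params: float, n_gpus: int, bytes_per_elem: int = 2) -> float:
    """ZeRO-3: all-gather params for forward, all-gather again for backward,
    then reduce-scatter the gradients."""
    model_bytes = n_params * bytes_per_elem
    return 3 * ring_fraction(n_gpus) * model_bytes


if __name__ == "__main__":
    n_params = 7e9  # hypothetical round number for a "7B" model
    n_gpus = 7
    ddp = ddp_bytes_per_gpu(n_params, n_gpus)
    z3 = zero3_bytes_per_gpu(n_params, n_gpus)
    print(f"DDP:    {ddp / 1e9:.1f} GB per GPU per step")
    print(f"ZeRO-3: {z3 / 1e9:.1f} GB per GPU per step ({z3 / ddp:.1f}x DDP)")
```

Under those assumptions, seven GPUs each move roughly 24 GB per step with DDP versus 36 GB with ZeRO-3, a 1.5x increase before any of the real-world extras are counted.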

In a recent full fine-tune of Mistral 7B using ZeRO-3, I got maybe a dozen errors over the course of a 20- to 30-minute run. But I didn't notice any actual hiccups watching the training itself, and the resulting model worked great. I'm planning to leave it for now, but if I get around to it, maybe I'll throw another C-Payne card (detailed on the Risers page) into the offending slot, which should sort it out.
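
If you want to count these errors yourself, Linux exposes per-device AER (Advanced Error Reporting) counters in sysfs. Below is a minimal Python sketch, assuming a kernel with AER support that provides `/sys/bus/pci/devices/<address>/aer_dev_correctable` as lines of `<name> <count>` including a `TOTAL_ERR_COR` total. The function names are hypothetical, and this is an illustration rather than necessarily the method behind the counts above.

```python
# Sum the kernel's AER correctable-error counters for every PCI device.
# Assumption: Linux with PCIe AER enabled, exposing sysfs files named
# aer_dev_correctable whose lines look like "<name> <count>".
from pathlib import Path

SYSFS_PCI = Path("/sys/bus/pci/devices")


def parse_aer_counters(text: str) -> dict[str, int]:
    """Parse 'name count' lines into a dict, skipping malformed lines."""
    counters = {}
    for line in text.splitlines():
        parts = line.split()
        if len(parts) == 2 and parts[1].isdigit():
            counters[parts[0]] = int(parts[1])
    return counters


def correctable_errors(root: Path = SYSFS_PCI) -> dict[str, int]:
    """Map PCI address (e.g. '0000:41:00.0') -> TOTAL_ERR_COR, nonzero only."""
    totals = {}
    for dev in sorted(root.glob("*")):
        aer_file = dev / "aer_dev_correctable"
        if not aer_file.is_file():
            continue  # this device (or kernel) doesn't report AER stats
        counters = parse_aer_counters(aer_file.read_text())
        total = counters.get("TOTAL_ERR_COR", 0)
        if total:
            totals[dev.name] = total
    return totals


if __name__ == "__main__":
    for addr, count in correctable_errors().items():
        print(f"{addr}: {count} correctable errors")
```

The counters are cumulative since boot, so one approach is to snapshot them before and after a training run and compare; the device addresses that change point at the offending slot.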

I also want to say that there are a lot of variables here. Board manufacturing has tolerances, and so on. So: Your Mileage May Vary. You're not buying an enterprise-grade rack full of servers that have been through a testing lab. This is cowboy stuff. If you have issues, I'm glad to try and help; just drop me a DM on X. But don't hold me responsible if you follow my path and aren't satisfied. You've been warned.

Concept

Sometime in December 2023, I decided to get serious about a real-deal training server. I'd played around with AI from the application side for a year or so, educated myself a fair bit on neural networks and LLMs, and was ready to go.

At first, I thought about renting GPUs, but that was kind of anathema to my usual way of doing things. If I were building for commercial purposes I could see doing that, but this is entirely a hobby for me. I enjoy hardware and really liked the idea of having a rack of GPUs I could put my eyes on and tuck into bed at night, so a DIY solution was in order.

After a lot of research, I concluded that I wanted to run 4090s: they support features such as FP8, and they benchmark very well, beating all other consumer cards (at the time of this writing) in both overall training throughput and throughput per dollar, according to Lambda Labs.

Mainboard

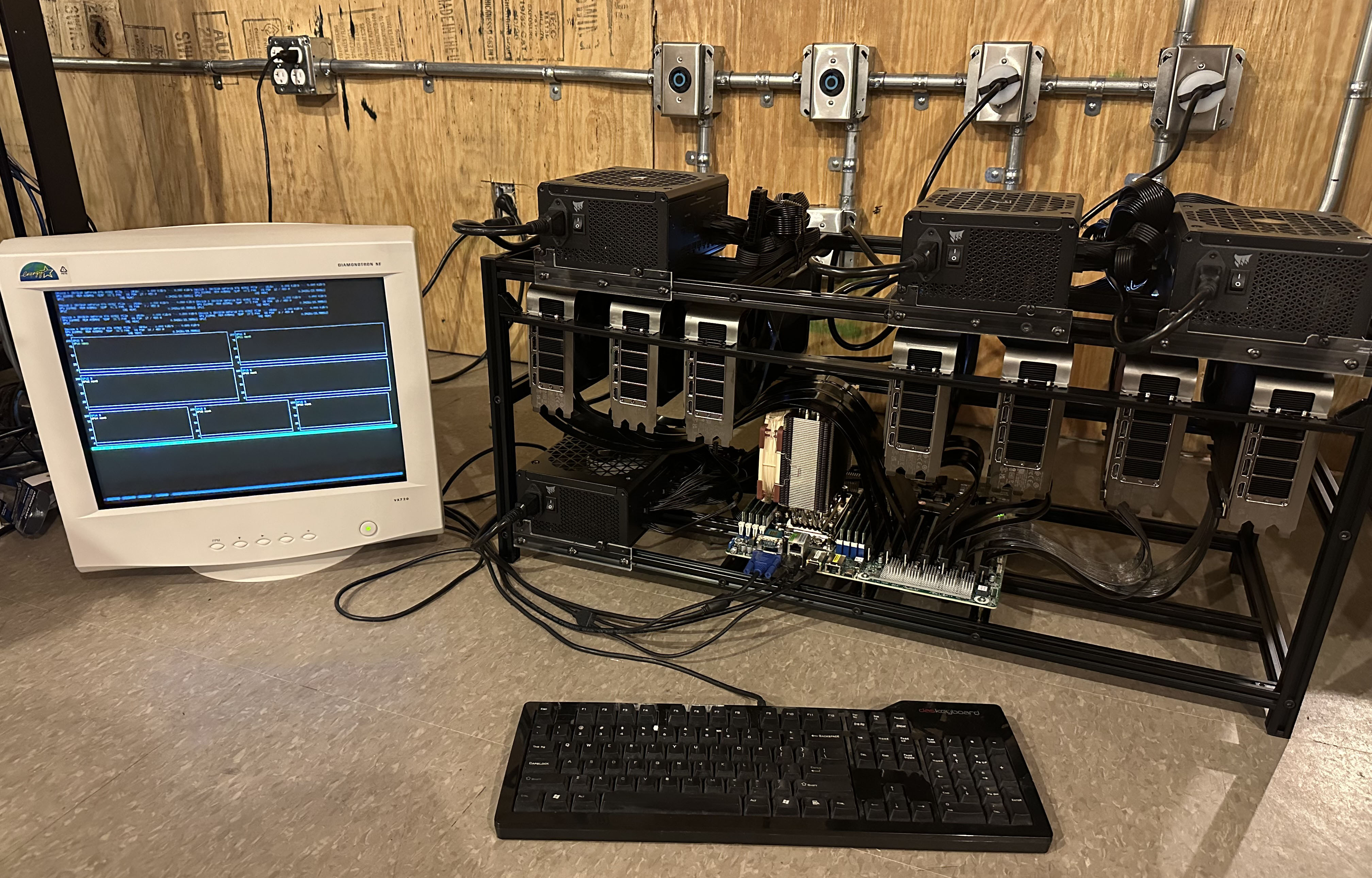

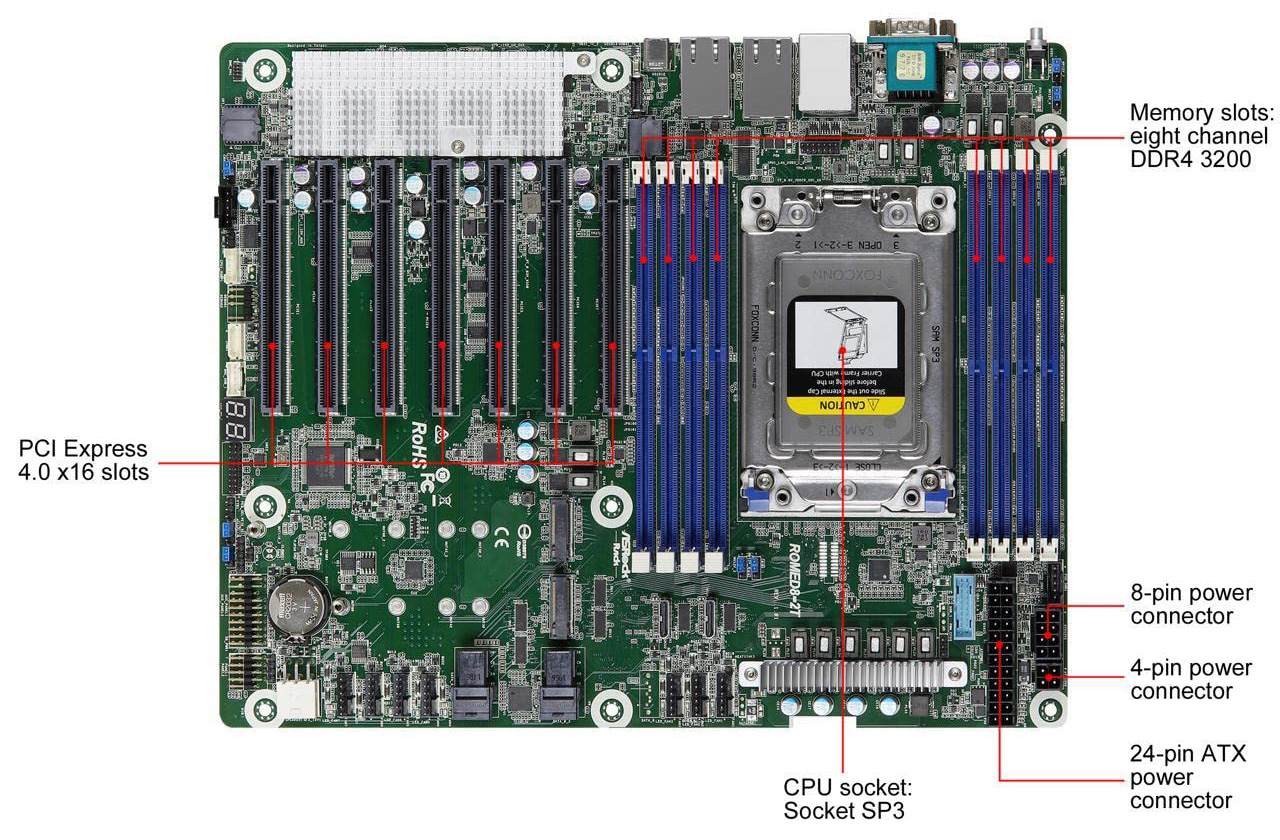

The follow-up question was: which host system? I wanted as many cards as possible without resorting to bifurcation or multiplexing, with every card running at its rated PCIe 4.0 x16. That requires a lot of PCIe lanes. After searching around, I came across this 7-slot legend, the ASRock Rack ROMED8-2T.

This board takes an AMD Epyc 7002/7003-series CPU, which provides 128 PCIe 4.0 lanes. In short, that means all 7 slots(!) can get full PCIe 4.0 x16 connectivity, which accounts for 112 of those lanes. But more on that later; it gets tricky.
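
The lane math is easy to check. Here's a small Python sketch using the slot and lane counts above plus the PCIe 4.0 line rate (16 GT/s per lane with 128b/130b encoding). The bandwidth it computes is the raw one-direction figure before packet and protocol overhead, so real transfers come in somewhat lower.

```python
# Back-of-the-envelope PCIe lane budget and per-slot bandwidth for this build.
# Slot/lane counts come from the text; the per-lane rate is the PCIe 4.0
# signaling rate, ignoring packet/protocol overhead.

CPU_LANES = 128
SLOTS = 7
LANES_PER_SLOT = 16


def pcie4_gbytes_per_s(lanes: int) -> float:
    """Raw PCIe 4.0 bandwidth in GB/s, one direction, after line encoding."""
    gt_per_s = 16e9       # 16 GT/s per lane
    encoding = 128 / 130  # 128b/130b line code
    return gt_per_s * encoding * lanes / 8 / 1e9  # bits -> bytes -> GB


if __name__ == "__main__":
    gpu_lanes = SLOTS * LANES_PER_SLOT
    print(f"GPU lanes: {gpu_lanes} of {CPU_LANES} ({CPU_LANES - gpu_lanes} left over)")
    print(f"Per x16 slot: ~{pcie4_gbytes_per_s(LANES_PER_SLOT):.1f} GB/s each direction")
```

That works out to 112 of the 128 lanes for the GPUs and roughly 31.5 GB/s per x16 slot in each direction.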

CPU

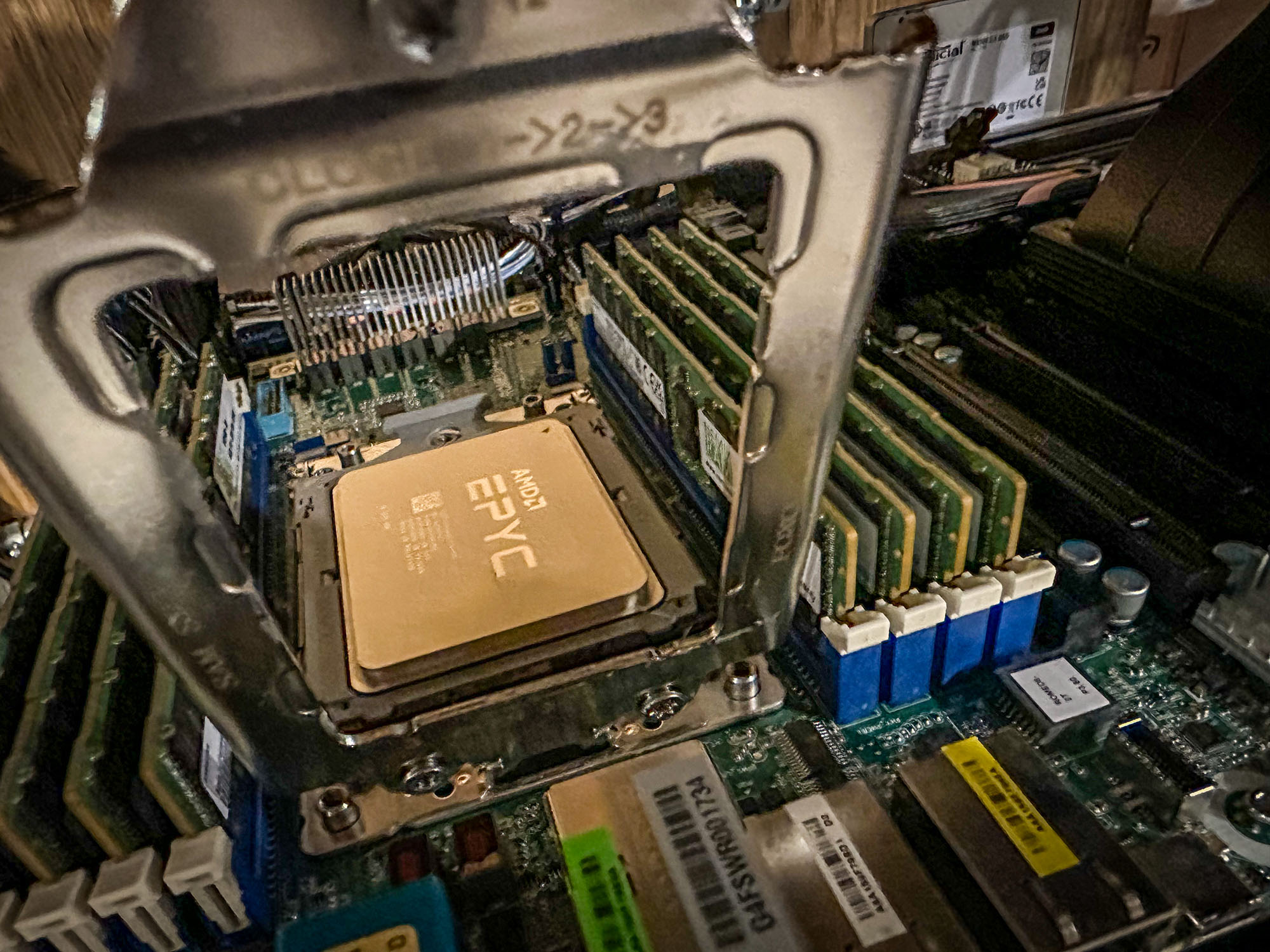

As mentioned, the board supports Epyc 7002 (Rome) and 7003 (Milan). With that in mind, I went shopping. I thought it'd be cool to have the 64-core monster 7763, and figured a brute of a CPU would be important to keep data moving to the GPUs. A new 7763 runs a couple grand, though, so I decided to check eBay. There I found an "Engineering Sample" chip from China for about half the price. Part number: 100-000000314-04.

I'd read a little about Epyc engineering samples: often they're not much different from a normal CPU, except that they can be overclocked. So, Buy It Now, right? Well, this CPU is a little strange in that it's underclocked: 1.6 GHz base (3.0 GHz boost) vs. 2.45/3.5 GHz for a retail 7763. Notably, it also doesn't appear to be overclockable. That said, it's half the price of a retail 7763, so still a deal, as long as it arrived in working condition.

And it did. It does pretty well, actually: in addition to 64 cores, we get the usual 8-channel DDR4 with a theoretical ~170 GB/s of memory bandwidth. That makes it no slouch in the LLM inference department, laying down ~16 tok/s for Mixtral Q4_K_M on the CPU alone.
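
Why does memory bandwidth matter so much here? Token generation is largely memory-bandwidth bound, because each new token has to stream the active weights out of RAM. Here's a rough Python sketch of that ceiling. The ~12.9B active parameters per token for Mixtral and ~4.85 bits per weight for Q4_K_M are approximate assumptions. The DIMM speed in this build isn't stated, so the sketch shows DDR4-2666 (which yields the ~170 GB/s figure) alongside DDR4-3200.

```python
# Rough ceiling on CPU-only decode speed, assuming generation is memory-bandwidth
# bound: each token streams all *active* weights from RAM once.
# Assumptions (approximate): Mixtral 8x7B activates ~12.9B params per token,
# and Q4_K_M averages ~4.85 bits per weight.


def ddr4_peak_gbytes_per_s(channels: int, mega_transfers: int) -> float:
    """Theoretical peak: channels x transfers/s x 8 bytes per 64-bit transfer."""
    return channels * mega_transfers * 1e6 * 8 / 1e9


def max_tokens_per_s(bandwidth_gbs: float, active_params: float, bits_per_weight: float) -> float:
    """Upper bound on decode speed if weight reads were the only cost."""
    gbytes_per_token = active_params * bits_per_weight / 8 / 1e9
    return bandwidth_gbs / gbytes_per_token


if __name__ == "__main__":
    for mts in (2666, 3200):
        bw = ddr4_peak_gbytes_per_s(channels=8, mega_transfers=mts)
        ceiling = max_tokens_per_s(bw, active_params=12.9e9, bits_per_weight=4.85)
        print(f"DDR4-{mts}: {bw:.1f} GB/s peak -> <= {ceiling:.0f} tok/s")
```

Under these assumptions the ceiling is roughly 22 tok/s at DDR4-2666, so ~16 tok/s in practice is in the expected range: achieved bandwidth always falls short of the theoretical peak, and compute and KV-cache reads add their own cost.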

It's worth noting that I also acquired a little 8-core Epyc 7262 to try out, in case I could recommend a much cheaper CPU to folks. It was not enough CPU for the job: in training tests, the 7763 performed 25% better than the 7262. There's an ideal CPU for the job somewhere in between (I don't think the 7763 is required), but I'll leave that to someone else to test. I do know others are running a 28-core with good success, so that may be a good option.

A note on engineering sample chips: some are vendor-locked, meaning they only work in, say, a Dell server. At the time of this writing, the seller linked in the BOM advertises and sells unlocked chips and has good reviews. All three times I've ordered from them, they've shipped very fast, with the CPU getting to me from China in about 3 days.

RAM

Being a server/workstation platform, the ROMED8-2T requires something more than the garden-variety DIMMs you might find at your local computer shop. In short, it supports three varieties of RAM: RDIMM, LRDIMM, and NVDIMM.

NVDIMMs are fairly rare. The NV stands for non-volatile, which means they retain their data in the event of a power failure. I mention them as trivia only; it's quite unlikely you'd want to use them.

RDIMMs and LRDIMMs are fairly similar. The 'R' in RDIMM stands for Registered, which means the module has a register that buffers the command and address signals, improving signal integrity by reducing the electrical load on the memory controller. LRDIMMs are Load-Reduced DIMMs: they also buffer the data lines, reducing that load further so you can run more memory on a system. They're usually a bit slower, though, as the additional buffering adds latency.

The ROMED8-2T supports RDIMM modules of up to 64GB. With 8 slots, that means up to 512GB of RAM. That ought to be plenty, so plan on shopping for plain RDIMMs. How much RAM you need is up to you: consider the memory requirements of your models and what you'll be doing with them, then add some for system overhead. To get the full 8-channel memory bandwidth out of the Epyc, you'll want to populate all 8 DIMM slots, so keep that in mind.
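
As a worked example of sizing, here's a Python sketch that picks the smallest module size filling all 8 channels (one DIMM each) for a given RAM target. The 16 bytes per parameter is the ZeRO paper's accounting for mixed-precision Adam (fp16 weights and gradients plus fp32 master weights, momentum, and variance), and it only lands in system RAM if you offload optimizer state to the CPU (e.g. with ZeRO-Offload). The 7B model, the 64 GB overhead allowance, and the list of module sizes are hypothetical choices for illustration.

```python
# Sketch: pick a DIMM size that fills all 8 channels and covers a RAM target.
# 16 bytes/param = mixed-precision Adam state per the ZeRO paper; it only
# matters for host RAM if that state is offloaded to the CPU.
# Model size, overhead allowance, and module list are hypothetical.

CHANNELS = 8
MODULE_SIZES_GB = (16, 32, 64)  # common RDIMM sizes; 64 GB is this board's stated max


def adam_state_gb(n_params: float, bytes_per_param: int = 16) -> float:
    """Memory for params, grads, and Adam state under mixed precision."""
    return n_params * bytes_per_param / 1e9


def pick_dimm_size(target_gb: float) -> int:
    """Smallest module size such that one DIMM per channel meets the target."""
    for size in MODULE_SIZES_GB:
        if size * CHANNELS >= target_gb:
            return size
    max_gb = MODULE_SIZES_GB[-1] * CHANNELS
    raise ValueError(f"{target_gb:.0f} GB exceeds {max_gb} GB at 1 DIMM per channel")


if __name__ == "__main__":
    states = adam_state_gb(7e9)  # hypothetical 7B model, fully offloaded
    target = states + 64         # plus a hypothetical 64 GB for OS, data loading, etc.
    size = pick_dimm_size(target)
    print(f"~{target:.0f} GB needed -> {CHANNELS} x {size} GB = {CHANNELS * size} GB")
```

With those inputs, ~176 GB rounds up to 8 x 32 GB = 256 GB; going to 8 x 64 GB reaches the board's 512 GB maximum.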

For my build, I went with 512GB (8x64GB RDIMMs).

With the core of the build decided, it was time to get to the rest of it.


| Nathan Odle - <nathan.odle@gmail.com> @mov_axbx on X |